The Tier2 at the National Laboratory of Frascati is one of the data centres that make up the WLCG (Worldwide LHC Computing Grid), the largest existing computational grid.

WLCG is a global infrastructure that provides the computing resources to store, distribute and analyse the roughly 30 Petabytes (30 million Gigabytes) of data generated each year by the Large Hadron Collider (LHC) at CERN, making the data available to all partners of the collaboration, regardless of their geographical location.
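To give a feel for the scale quoted above, the following minimal sketch checks the Petabyte-to-Gigabyte conversion and derives an average data rate. It assumes decimal (SI) units and a data volume spread evenly over a 365-day year; both are simplifying assumptions made for illustration, not figures from WLCG, and the variable names are hypothetical.

```python
# Decimal (SI) units are assumed: 1 PB = 10**15 bytes, 1 GB = 10**9 bytes.
PETABYTE = 10**15
GIGABYTE = 10**9

# Yearly LHC data volume quoted in the text.
yearly_bytes = 30 * PETABYTE
yearly_gigabytes = yearly_bytes // GIGABYTE

# Assumption: data is produced uniformly over a 365-day year.
# Real data taking is not uniform, so this is only an average.
SECONDS_PER_YEAR = 365 * 24 * 3600
average_rate_gb_per_s = yearly_gigabytes / SECONDS_PER_YEAR

print(f"{yearly_gigabytes:,} GB per year")
print(f"about {average_rate_gb_per_s:.2f} GB/s on average")
```

Under these assumptions the yearly volume corresponds to an average of a little under one Gigabyte per second, sustained all year round.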

WLCG is the result of a collaboration of national and international computing infrastructures, coordinated by CERN and operated by an international collaboration between the four LHC experiments (ALICE, ATLAS, CMS and LHCb) and the participating computing centres.

42 countries, 170 computing centres, 2 million jobs executed each day.

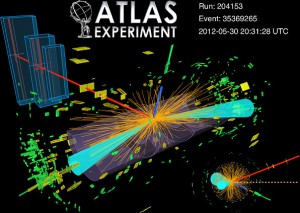

The computing carried out on WLCG allowed physicists to announce the discovery of the Higgs boson on 4 July 2012!

The WLCG infrastructure consists of multiple layers, or tiers (0, 1, 2 and 3), with different service levels.

Tier0, the CERN data centre situated in Geneva, is responsible for the custody of the raw data and for performing a first data reconstruction.

The Tier1s, 13 large computing centres with ample storage capacity, are each responsible for a share of the data and perform large-scale computations.

Finally, the Tier2s, which store part of the data, mainly provide computing power for analysis and Monte Carlo simulation.
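The division of responsibilities among the tiers described above can be summarised in a small data structure. This is only an illustrative sketch: the `Tier` class, the `TIERS` tuple and the `tiers_with_role` helper are hypothetical names introduced here, not part of any WLCG software, and Tier3 is left out because the text does not describe its role.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """One layer of the WLCG hierarchy, as described in the text."""
    name: str
    sites: str
    roles: tuple


# Roles are paraphrased from the description above; nothing else is assumed.
TIERS = (
    Tier("Tier0", "CERN data centre, Geneva",
         ("custody of raw data", "first data reconstruction")),
    Tier("Tier1", "13 large computing centres",
         ("custody of a share of the data", "large-scale computations")),
    Tier("Tier2", "multiple computing centres",
         ("storage of part of the data", "analysis", "Monte Carlo simulation")),
)


def tiers_with_role(keyword):
    """Return the names of the tiers whose roles mention the keyword."""
    return [t.name for t in TIERS if any(keyword in r for r in t.roles)]


print(tiers_with_role("simulation"))
print(tiers_with_role("reconstruction"))
```

Looking up a keyword such as "simulation" or "reconstruction" shows at a glance which layer takes on that kind of work.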

One of the Tier2s, mainly dedicated to computing for the ATLAS experiment, is hosted at the INFN National Laboratory of Frascati.

In addition to ATLAS, this centre also supports the activities of other experiments, such as KM3NeT, Belle II, the other LHC experiments and, within the scope of a collaboration between INFN and INAF, CTA (Cherenkov Telescope Array).

The CTA project is considered strategic for both institutions in the coming years, as a way to develop computing-related aspects in synergy by integrating their different skills and experience: managing large computing infrastructures (INFN) and implementing software for data analysis and for astronomy archive and database management (INAF).

The INFN National Laboratory of Frascati, in collaboration with INAF and other research institutes, participates in the I.Bi.S.Co project (Infrastructure for BIg Data and Scientific Computing) within the National Operative Program on Research and Innovation (PON) 2014-2020.

The goal of I.Bi.S.Co is to strengthen the existing scientific computing infrastructure in southern Italy, made up of the computing centres of the INFN sections of Bari, Catania and Napoli, which were already funded by the PON 2007-2013. The aim is to build a multi-disciplinary and multi-functional infrastructure that is also available to other Italian research institutes.

The I.Bi.S.Co project is the first step towards an Italian Computing and Data Infrastructure (ICDI), through the realization of its southern part (ICDI-sud). The INFN National Laboratory of Frascati has been included in this project and will serve as a connection between ICDI-sud and central Italy.

The I.Bi.S.Co project will last 32 months. Besides INFN, the other proposing co-partners are Università degli Studi di Bari Aldo Moro, Università degli Studi di Napoli Federico II, CNR, INGV and INAF.

The latter joins the project through its collaboration with the INFN National Laboratory of Frascati on the CTA project.

The partnership has also obtained funding from MIUR for personnel to be devoted to the I.Bi.S.Co infrastructure.

Finally, the Tier2 also takes part in a number of initiatives that support the computing of local groups and of the ATLAS collaboration, for example:

- participation in the management of the ATLAS Virtual Organization (defined as the set of resources, people and policies of the entire experiment community),

- participation in the development and testing of the DPM (Disk Pool Manager) software, which manages storage systems in the Grid,

- participation in the IDDLS project, which investigates and tests, with real use cases, programmable-network technologies for interconnecting computing centres. The project is creating a prototype of a federated storage infrastructure, or DataLake, serving high-energy physics experiments, with the aim of optimising the use of resources and expanding them by also accessing commercial clouds. The ultimate goal is a pilot Italian DataLake connecting the Tier1, some Tier2s and some data producers.

INFN-LNF Laboratori Nazionali di Frascati
