The deep learning of the Universe

By Viviana Acquaviva

The terms “artificial intelligence” and “machine learning” are now ubiquitous in every scientific field, from biology and chemistry to medicine and, of course, physics. However, it is hard to think of a discipline that has undergone a methodological revolution as swift and substantial as the one in astrophysics.

The reasons for this change lie partly in the very nature of the investigation of the Universe. The methods of artificial intelligence, from machine learning to data mining, are ideal for describing and investigating phenomena whose physical models are not sophisticated enough to capture their complexity. Typically, this happens when we deal with extremely complicated objects, described by a very large number of variables, or when our understanding and interpretation of the physical phenomena is limited. This description fits many problems in astrophysics like a glove, from galaxy formation to stellar dynamics, and from cosmological simulations of the dark matter distribution in the Universe to the detection of variable signals in a data stream. Consider also that projects like the Large Synoptic Survey Telescope (LSST) will generate, in a few years, a hundred times the amount of data produced by previous generations of observatories, making some current methods, such as manual searches, totally obsolete. It is no wonder that astrophysics is one of the fields that adopted the new techniques quickly and probably irreversibly.

But what are the most successful machine learning applications in astrophysics and cosmology? The list is too long to give in full. A great strength of these techniques, especially of deep learning, is their effectiveness in analyzing multidimensional data, such as images, which contain information that is often difficult to describe analytically.

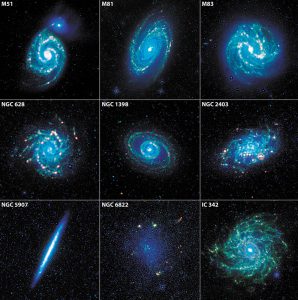

For example, identifying a spiral galaxy seen from different angles is a relatively simple task for the human eye (and brain), but writing down a mathematical formula, a model, capable of “searching” for this galaxy in an image while taking into account all the possible perspectives is extremely complicated. Here neural networks can help, since their architecture makes it easy to capture spatial correlations in two or three dimensions, provided that the system has a set of representative examples to learn from, much as a child easily learns to recognize animals after seeing them a few times in pictures. It is no coincidence that one of the first applications of machine learning to astronomical data was the classification of galaxies according to their morphology, in collaboration with the Galaxy Zoo team, a project in which the public was called upon to create, through “human” recognition, a collection of representative examples that could guide the algorithm.
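The idea that a network's architecture captures spatial correlations can be illustrated with the basic operation of a convolutional layer: a small filter slid across the image. The following is a minimal sketch, not the method used by any particular survey. The 3x3 `pattern`, the image size, and the helper names (`convolve2d_valid`, `make_image`, `locate`) are all assumptions made for illustration, and the filter is fixed by hand here, whereas a real network would learn it from examples.

```python
import numpy as np

def convolve2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding and return the response map.

    This is the cross-correlation that deep learning libraries call "convolution".
    """
    kh, kw = kernel.shape
    ih, iw = image.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical 3x3 "blob" standing in for a compact source in an image.
pattern = np.array([[0.0, 1.0, 0.0],
                    [1.0, 2.0, 1.0],
                    [0.0, 1.0, 0.0]])

def make_image(row, col, size=12):
    """Place the pattern with its top-left corner at (row, col) in an empty image."""
    img = np.zeros((size, size))
    img[row:row + 3, col:col + 3] = pattern
    return img

def locate(image):
    """Return the (row, col) position where the filter response is strongest."""
    response = convolve2d_valid(image, pattern)
    idx = np.unravel_index(np.argmax(response), response.shape)
    return tuple(int(v) for v in idx)

# The same filter finds the source wherever it sits in the image.
found_a = locate(make_image(2, 3))
found_b = locate(make_image(7, 5))
print(found_a, found_b)
```

Because the same small filter is reused at every position, the response simply moves with the object. This is one reason such architectures cope well with sources appearing anywhere in the field of view; handling different viewing angles, as in the spiral galaxy example, additionally relies on the representative training examples mentioned above.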

More generally, the ability of neural networks to analyze images enables machine learning to improve the quality of data analysis compared to traditional methods, so that the same pictures of stars and galaxies appear clearer and richer in information when analyzed with the new methods. Furthermore, it makes it possible to capture information “hidden” in complex structures, like the detailed statistical properties of maps of the cosmic microwave background radiation, or of “weak gravitational lensing” (the distortion of the images of distant galaxies caused by the deflection of light by the matter it encounters on the path between its source and the Earth). This also opens a promising new direction in the field of cosmological simulations, which generally require large computational resources. Certain types of neural networks, like generative adversarial networks (GANs), introduced only in 2014 and built around a component trained to tell real data from fake, have been successfully used to generate new data and expand the volume of simulations at a much lower computational cost. Improving these methods will make it possible, in the future, to obtain high-resolution cosmological simulations over very large volumes, which today is prohibitive because of the excessive time required. Recurrent neural networks (RNNs) are well suited to analyzing time-ordered data (time series), which is essential for promptly detecting variable sources such as supernovae or cosmic rays. They will certainly be crucial in the LSST data analysis, as well as in the emerging field of multi-messenger astronomy, the combined analysis of signals of both electromagnetic and gravitational origin.

But there is more to machine learning than neural networks. On the one hand, astrophysics is not just about images. On the other hand, all deep learning methods tend to sacrifice interpretability in favour of precision. In other words, it is hard to understand why a neural network makes a certain decision and, consequently, to learn new physics from it. For this reason, while the development of tools that can decode the output of a neural network is becoming an increasingly discussed topic, other simpler and more transparent methods have powerful applications in areas where models are lacking.

A good example is the calculation of galaxy distances based on photometry (photometric redshifts). In this case, machine learning methods like Random Forest (a particular combination of decision trees), Principal Component Analysis, or even just linear combinations of empirical spectra are often more precise than methods based on models of galaxy energy emission. We should also mention the importance of machine learning techniques in easing the increasingly pressing problem of representing and compressing the information contained in data. The volume of data produced by the new generations of telescopes (in the LSST case, of the order of tens of terabytes per night) cannot be handled by a user working on a personal computer and, obviously, the situation gets worse if we consider derived products like catalogues, probability distributions of parameters, and so on. The admirable and necessary practice of publicly sharing data, both original and derived, which is typical of astrophysics and a pillar of the reproducibility of results required by the scientific method, risks running aground on a technical problem of access to those same data. While some of these problems will only be solved by allocating new resources, machine learning can help through specialized techniques, known as “dimensionality reduction”, which identify the most interesting projections in the data space and reduce its dimension with minimal sacrifice of information content.
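As an illustration of dimensionality reduction, the sketch below applies principal component analysis, computed through a singular value decomposition, to a synthetic table. It is a toy example under stated assumptions: the “catalogue” is random data with 10 features generated from 2 hidden factors plus small noise, and the function name `pca` and all variable names are hypothetical. Real catalogues are rarely this cleanly low-dimensional.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for a catalogue (an assumption for illustration only):
# 500 objects, 10 measured features driven by 2 hidden factors plus small noise.
n_objects, n_features, n_hidden = 500, 10, 2
hidden = rng.normal(size=(n_objects, n_hidden))
mixing = rng.normal(size=(n_hidden, n_features))
data = hidden @ mixing + 0.01 * rng.normal(size=(n_objects, n_features))

def pca(x, n_components):
    """Project `x` onto its `n_components` directions of largest variance."""
    mean = x.mean(axis=0)
    centered = x - mean
    # Rows of `vt` are the principal directions, ordered by decreasing variance.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained = s ** 2 / np.sum(s ** 2)  # fraction of variance per direction
    compressed = centered @ components.T
    return compressed, components, mean, explained

compressed, components, mean, explained = pca(data, n_components=2)

# Map the 2 stored numbers per object back to the original 10 features.
reconstructed = compressed @ components + mean
retained = float(explained[:2].sum())
rel_error = float(np.linalg.norm(data - reconstructed) / np.linalg.norm(data))
print(compressed.shape, retained, rel_error)
```

In this toy setting, storing two numbers per object instead of ten loses almost nothing, because the data were built to lie close to a two-dimensional plane. On real data, the fraction of variance retained indicates how many components are worth keeping.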

Lastly, it is worth remembering that the revolution brought about by the spread of machine learning methods has profound implications on a human level. It changes the role of the researcher, who becomes perhaps less specialized, has to know programming languages and data visualization tools, and becomes, now more than ever, a “narrator” of complex and fascinating stories. There are more opportunities for interdisciplinary research, in which data science provides the common language that has so far been lacking among statisticians, computer scientists and astronomers. Moreover, new career opportunities are opening up between astrophysics and computer science, as shown by new professional profiles, like the “support scientist”, that are increasingly common in job advertisements. Above all, it is important to keep these changes in mind when training tomorrow’s physicists, so that they can make the most of this exceptional opportunity to analyze data in ways hitherto impossible or unknown, and discover new truths about the Universe around us.

Translation by Camilla Paola Maglione, Communications Office INFN-LNF

INFN-LNF Laboratori Nazionali di Frascati
